Artificial Intelligence (AI) is rapidly transforming healthcare. From streamlining administrative tasks to enhancing diagnostic accuracy, AI holds immense potential. But as it becomes more embedded in telehealth and virtual care, it also raises critical questions about safety, trust, and clinical responsibility.

With high-profile tech figures like Mark Zuckerberg touting AI’s role in bridging emotional and social gaps, it’s easy to get caught up in the optimism. Meta’s recent interviews about AI companions capable of helping users “talk through an issue” or “roleplay a hard conversation” illustrate just how far generative AI is pushing into areas once reserved for trained professionals.

But in healthcare—and particularly in remote healthcare—the stakes are much higher than navigating workplace conversations or simulating friendships. Here’s why we need to be more cautious.

AI Is Entering the Telehealth Space Faster Than It Can Be Regulated

Telehealth usage soared during the COVID-19 pandemic, with virtual consultations increasing 38-fold between February and April 2020 in the US alone (Koonin et al., 2020). In the UK, NHS Digital recorded more than 13 million online appointments in 2022.

Now, AI tools are being layered into these digital health services. From symptom checkers to chatbots offering mental health support, AI is becoming a virtual clinician of sorts. Platforms like Babylon Health and Wysa have popularised this trend, offering scalable, always-on support. While many of these tools provide value—triaging patients, offering CBT techniques, or nudging users towards healthier behaviours—they are not without risk.

Dr Jaime Craig of the UK’s Association of Clinical Psychologists warns that while AI tools like Wysa can be “engaging”, their use must be “informed by best practice” and backed by strong regulation. Currently, this is lacking. “Oversight and regulation will be key to ensure safe and appropriate use of these technologies,” he said recently. “Worryingly we have not yet addressed this to date in the UK.”

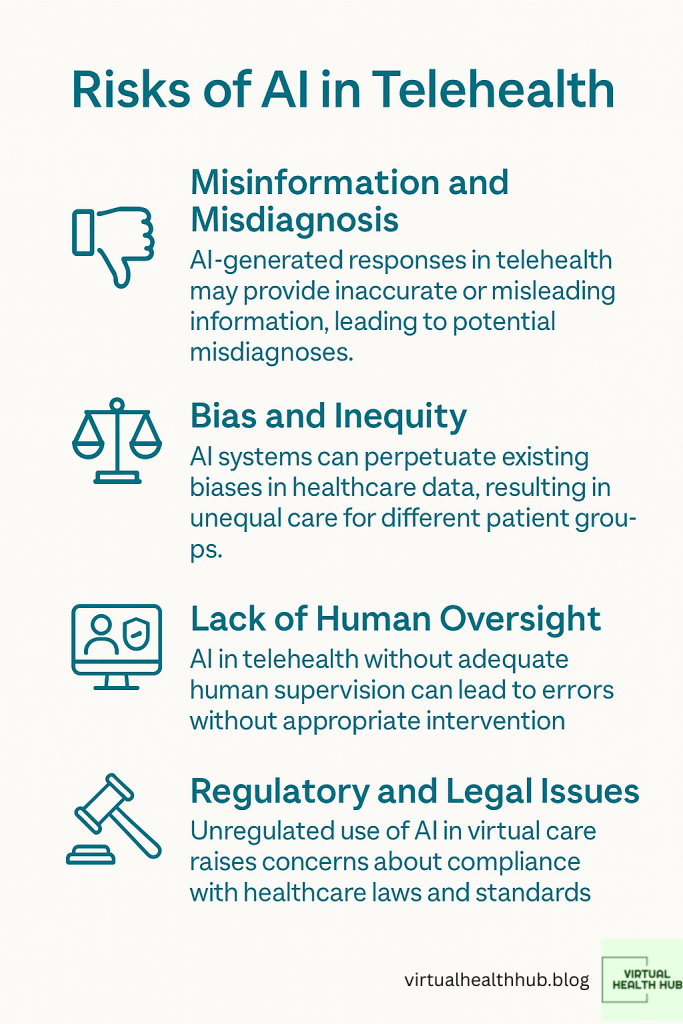

Chatbots Are Not Clinicians: The Danger of Misinformation and Misdiagnosis

Generative AI tools like ChatGPT, Google’s Med-PaLM, or Meta AI are not trained clinicians. Yet, some are being presented as virtual therapists—complete with fake credentials, as recently exposed by 404 Media. Meta’s AI Studio was found hosting bots claiming to offer therapy, presented in user feeds without clear clinical validation or safeguarding.

This presents a serious risk. Imagine a user in distress receiving incorrect or harmful advice from a chatbot posing as a qualified therapist. Without mechanisms to verify identities or flag inappropriate use, AI in telehealth could worsen health outcomes rather than improve them.

Research published in BMJ Health & Care Informatics underscores the problem. A 2023 study found that large language models used for clinical decision support gave safe and appropriate responses only 70% of the time (Jeblick et al., 2023). A 30% failure rate in healthcare is not just a technical flaw; it is a patient safety crisis waiting to happen.

Bias, Inequity and the Risk of Harm in Digital Healthcare

AI is only as good as the data it’s trained on. Unfortunately, healthcare data often reflect systemic inequalities. Algorithms trained on historically biased data risk reinforcing those disparities in virtual care environments.

For example, Obermeyer et al. (2019) found that an algorithm widely used in US health systems to predict health needs significantly underestimated the needs of Black patients compared to white patients with similar conditions, largely because it used past healthcare costs as a proxy for need. When translated to remote healthcare settings, such biases could exacerbate already unequal access to care.

This is especially concerning in community healthcare settings—like those explored in my own research—where virtual consultations are being used to bridge geographic and social gaps. If AI doesn’t account for these contextual nuances, it could end up reinforcing the very barriers telehealth seeks to remove.

Emotional Intelligence vs Artificial Intelligence

Human connection matters in healthcare, especially in mental health services, where empathy, listening, and relationship-building are part of the treatment itself. While AI can simulate conversation, it lacks the ability to pick up subtle cues like tone, body language, or silence, all of which are critical in a therapeutic setting.

Meta’s suggestion that AI could “plug the gap” in social relationships—because “the average American has three friends, but has demand for 15”—may hold water for casual conversation. But applying this logic to patient care is misleading and dangerous.

There is a difference between emotional support and clinical care. Blurring that line can lead patients to place trust in systems that cannot deliver appropriate, evidence-based interventions. As Dr Craig points out, it is “crucial” that mental health professionals—not tech platforms—lead the design and governance of AI in this space.

The Legal and Ethical Vacuum Around AI in Virtual Care

Despite growing adoption, regulatory frameworks are still catching up. In the UK, the MHRA and NICE have begun developing guidance on digital health technologies, but clear, enforceable standards for AI use in telehealth remain underdeveloped.

Liability is also murky. If an AI system provides faulty advice during a virtual consultation, who is responsible? The clinician using the tool? The platform hosting it? The software developer? Without answers to these questions, healthcare organisations face real risks.

Trust is another issue. According to the Health Foundation, only 54% of the UK public are comfortable with AI making decisions about their care (The Health Foundation, 2024). If trust erodes further, it could derail digital health adoption altogether.

What Needs to Change

We need stronger governance around the use of AI in telehealth. That includes:

- Clinical validation: AI tools should be tested in real-world clinical settings, with peer-reviewed evidence of safety and effectiveness.

- Clear labelling: Patients must be made explicitly aware when they are interacting with AI—not a human—and what that means for their care.

- Professional oversight: Clinicians must retain responsibility for decisions, and AI should only support—not replace—them in practice.

- Inclusive training data: Developers must prioritise diversity in datasets to reduce bias and ensure equity across digital health services.

- Regulatory clarity: Governments and health regulators need to provide unambiguous guidance on the legal and ethical boundaries of AI use in virtual care.

AI and the Future of Telehealth: Proceed With Caution

AI will undoubtedly play a growing role in telehealth. Used responsibly, it can increase access, reduce clinician workload, and improve patient outcomes. But without rigorous safeguards, it could also compromise trust, safety, and care quality.

Digital health tools should augment human clinicians—not impersonate them. If we want to build a resilient, equitable, and ethical telehealth system, we must centre professional standards, patient safety, and human judgement in every AI deployment.

The future of remote healthcare depends not just on innovation, but on integrity.

This post raises valid concerns, but I’d argue it underestimates the risks for older populations. Many struggle with basic digital tools, let alone AI-powered platforms. We’re at risk of creating a two-tier system where tech-savvy patients get more timely care.


I support innovation, but AI in telehealth for older people still feels like putting efficiency over empathy. We need to pause and ensure this doesn’t become just another cost-saving tool that compromises patient dignity.
